Machine Code Explained -- Matt Godbolt

Explaining machine code from the ground up!

Machine Code Explained

by Matt Godbolt

From the video:

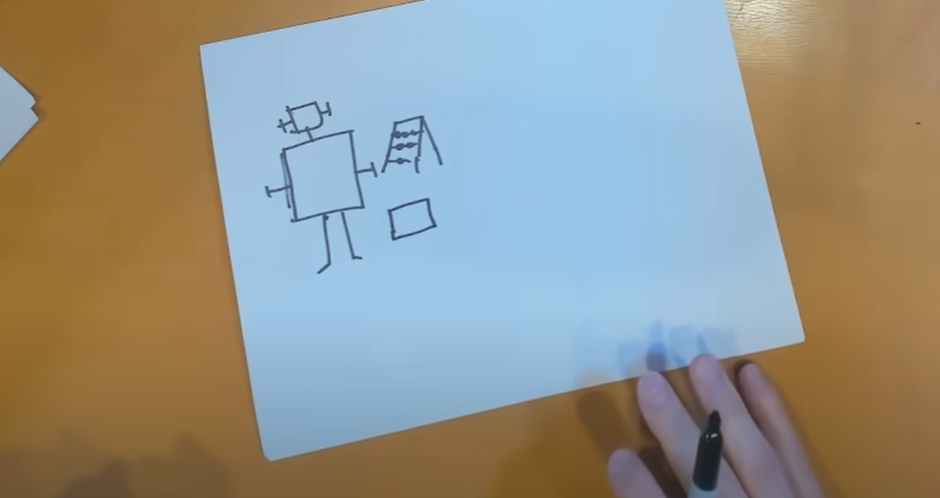

In this video, Matt Godbolt appears on Computerphile to talk about a long-standing fascination with how computers work, and about a mental model formed in the 1980s that still helps make sense of modern computer systems. The model is a simple analogy: a robot with an abacus and a wall of pigeonholes, which is enough to explain the fundamental operations of a computer and how it executes a program. Matt then shows that machine code, the computer's own language, can be represented as a sequence of numbers stored in memory that tells the computer what to do, which illustrates the basic idea of programming.
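To make the idea of "a program is just numbers in memory" concrete, here is a minimal sketch of a toy machine in Python. It is not the machine from the video: the opcode numbers (`HALT`, `LOAD`, `ADD`, `STORE`), the two-number instruction layout, and the `run` function are all assumptions invented for illustration. The list plays the role of the pigeonholes, the accumulator plays the role of the abacus, and the loop is the robot walking from one pigeonhole to the next.

```python
# Hypothetical opcodes for this sketch only; they are not taken from the video
# or from any real processor.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Run the program held in `memory`, starting at address 0.

    Returns a copy of memory as it looks when the machine halts.
    """
    memory = list(memory)  # work on a copy so the caller's list is untouched
    acc = 0                # the "abacus": a single working value
    pc = 0                 # which "pigeonhole" the robot reads next

    while True:
        opcode = memory[pc]
        if opcode == HALT:
            return memory

        # Every other instruction is followed by one operand: an address.
        operand = memory[pc + 1]
        if opcode == LOAD:
            acc = memory[operand]       # copy a pigeonhole onto the abacus
        elif opcode == ADD:
            acc += memory[operand]      # add a pigeonhole to the abacus
        elif opcode == STORE:
            memory[operand] = acc       # copy the abacus into a pigeonhole
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc}")
        pc += 2                         # move on to the next instruction


# Program and data live in the same memory, as plain numbers.
program = [
    LOAD, 7,    # addresses 0-1: put the value at address 7 on the abacus
    ADD, 8,     # addresses 2-3: add the value at address 8
    STORE, 9,   # addresses 4-5: write the result to address 9
    HALT,       # address 6: stop
    2,          # address 7: first input
    3,          # address 8: second input
    0,          # address 9: the result goes here
]

result = run(program)
print(result[9])  # prints 5
```

Printing `program` shows nothing but the integers `[1, 7, 2, 8, 3, 9, 0, 2, 3, 0]`. Whether a given number is treated as an instruction or as data depends only on whether the program counter reaches it.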